Data Hub

Break down organizational silos

Transform your scattered data sources into unified analytics power. Built on Trino and Apache Iceberg, Data Hub lets you query PostgreSQL, MongoDB, S3 and other sources as if they were a single database, with 30+ connectors planned.

Powered by open standards

Trino

Distributed and stateless SQL engine. Query any data source with standard SQL, without moving your data.

Apache Iceberg

Open source table format for data lakes. ACID transactions, time travel, schema evolution and optimal performance.

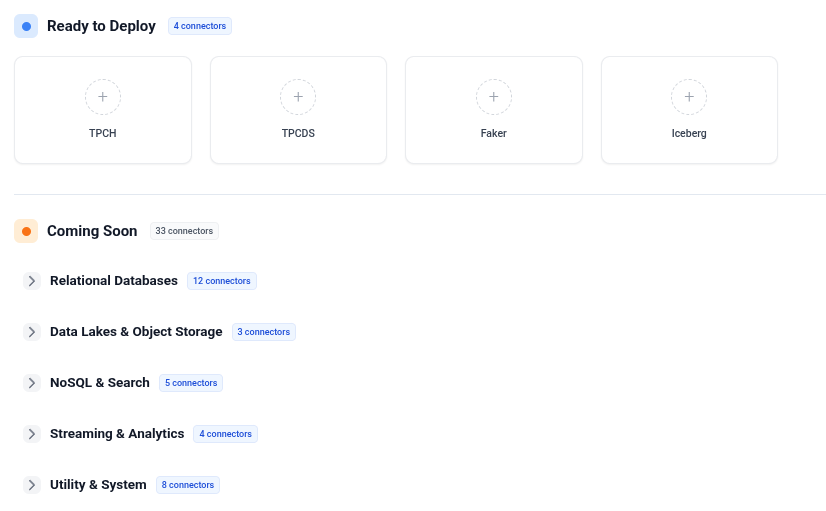

Available connectors

PostgreSQL

Relational databases

MySQL

Relational databases

MongoDB

NoSQL

S3 / MinIO

Object storage

Kafka

Streaming

Elasticsearch

Search

And many more coming... 30+ connectors planned.

Specifications

Connectors

30+ planned

SQL Engine

Distributed Trino

Storage

Apache Iceberg

Cross-source

Multi-source joins

Dataset Branching

Planned 2026

Auto Optimizer

Q4 2026

Use cases

Cross-source analytics

Join PostgreSQL with MongoDB in a single query

Query any source

Complex KPI calculation

Advanced analytics on distributed datasets

10x faster than traditional ETL

Unified lakehouse

All your data sources in one queryable platform

50% infra cost reduction

In action

The impossible join

Sarah needs to join customer data (PostgreSQL) with product interactions (MongoDB)

1. Traditional approach: Extract → Transform → Load (weeks of work)

2. Hyperfluid approach: One SQL query across both sources

3. SELECT * FROM postgres.customers JOIN mongo.interactions...

4. Results in seconds, without moving data

Complex analytics that took weeks, now in real time
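As a minimal sketch of what such a federated query could look like, the Python snippet below builds one Trino SQL statement that joins a PostgreSQL table to a MongoDB collection. Trino addresses tables as catalog.schema.table; the catalog names `postgres` and `mongo`, the schemas `public` and `app`, the column names and the `customer_id` join key are illustrative assumptions, not names taken from Data Hub.

```python
def build_cross_source_join(limit: int = 100) -> str:
    """Return a Trino SQL query joining PostgreSQL customers to MongoDB interactions.

    All catalog, schema, table and column names are hypothetical placeholders.
    """
    return (
        "SELECT c.customer_id, c.name, i.event_type, i.occurred_at\n"
        "FROM postgres.public.customers AS c\n"      # assumed PostgreSQL catalog
        "JOIN mongo.app.interactions AS i\n"         # assumed MongoDB catalog
        "  ON i.customer_id = c.customer_id\n"       # assumed shared key
        f"LIMIT {int(limit)}"
    )


def run_query(cursor, sql: str) -> list:
    """Execute `sql` on any DB-API 2.0 cursor and return all rows."""
    cursor.execute(sql)
    return cursor.fetchall()


if __name__ == "__main__":
    print(build_cross_source_join(limit=10))
```

With the open-source `trino` Python client, the cursor would typically come from `trino.dbapi.connect(host=..., port=..., user=...).cursor()`; the connection details depend on your deployment.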

The KPI revolution

Finance team calculates monthly revenue from 8 different systems

1. Connect 8 systems via Data Hub connectors

2. Write SQL query covering all sources

3. Trino engine distributes computation automatically

4. Complex revenue calculation with correct attribution

Monthly reporting went from 2 weeks to 30 minutes
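One way to picture the "SQL query covering all sources" step is a UNION ALL over one payments table per system, aggregated by month. The sketch below generates such a query; it assumes every source exposes `amount` and `paid_at` columns, which real systems rarely do without some mapping, and the table names in the usage example are hypothetical.

```python
from typing import Sequence


def build_monthly_revenue_query(tables: Sequence[str]) -> str:
    """Build a Trino SQL query summing revenue per month and per source.

    `tables` holds fully qualified names (catalog.schema.table). They are
    interpolated directly, so they must be trusted identifiers, not user input.
    Assumes each table has `amount` and `paid_at` columns (illustrative only).
    """
    if not tables:
        raise ValueError("at least one source table is required")
    selects = [
        f"  SELECT '{t}' AS source, amount, paid_at FROM {t}" for t in tables
    ]
    union = "\n  UNION ALL\n".join(selects)
    return (
        "SELECT date_trunc('month', paid_at) AS month,\n"
        "       source,\n"
        "       sum(amount) AS revenue\n"
        "FROM (\n"
        f"{union}\n"
        ") AS payments\n"
        "GROUP BY 1, 2\n"
        "ORDER BY 1, 2"
    )


if __name__ == "__main__":
    print(build_monthly_revenue_query(
        ["postgres.billing.payments", "mysql.shop.orders"]
    ))
```

Keeping the `source` column in the result makes it possible to check each system's contribution before summing to a single monthly figure.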

The time machine

Thanks to Iceberg, travel through time in your data (Planned 2026)

1. Create a dataset branch for Q3 analysis

2. Experiment with data transformations safely

3. Compare results with main branch

4. Merge successful changes or abandon experiments

Safe data experimentation without breaking production
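Dataset branching is a planned feature, so its interface is not shown here. What can be sketched today is the Iceberg time travel it builds on: Trino's Iceberg connector accepts a `FOR TIMESTAMP AS OF` clause to read a table as it existed at a past moment. The helper below only builds such a query; the table name in the usage example is a hypothetical placeholder.

```python
from datetime import datetime, timezone


def build_time_travel_query(table: str, as_of: datetime) -> str:
    """Return a Trino SQL query counting rows of an Iceberg table at `as_of`.

    `table` is a trusted, fully qualified name (catalog.schema.table).
    `as_of` must be timezone-aware; it is normalized to UTC.
    """
    if as_of.tzinfo is None:
        raise ValueError("as_of must be timezone-aware")
    stamp = as_of.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")
    return (
        "SELECT count(*) AS row_count\n"
        f"FROM {table} FOR TIMESTAMP AS OF TIMESTAMP '{stamp} UTC'"
    )


if __name__ == "__main__":
    # Hypothetical table; compare this count with the same query run
    # without the FOR TIMESTAMP clause to see what changed since then.
    end_of_q3 = datetime(2025, 9, 30, 23, 59, 59, tzinfo=timezone.utc)
    print(build_time_travel_query("iceberg.sales.orders", end_of_q3))
```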

Ready to unify your data sources?

Discover how Data Hub can eliminate your data silos.

Request a demo